ChatGPT helps out with my panels at Balticon

I decided to ask ChatGPT to do some research for my panels at Balticon. It actually did a pretty good job, although, being somewhat aware of its limits and strengths, I was careful to phrase the prompts appropriately. The headlines are the prompts I gave ChatGPT, taken from the panel titles but modified a bit; the text is its response. Note there was a certain amount of finger-wagging from ChatGPT when we got to space piracy!

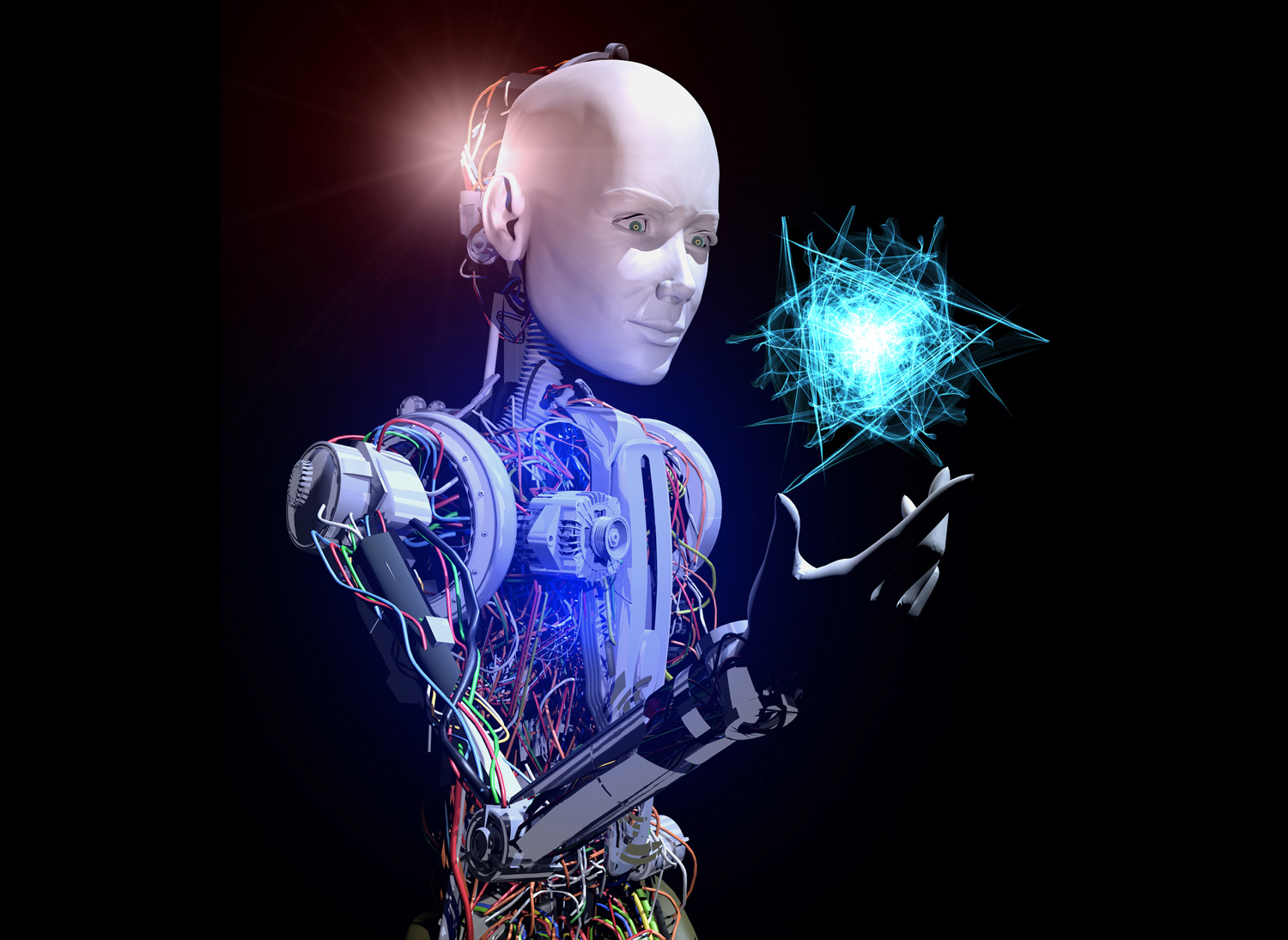

How will generative AIs transform the world?

Generative AIs, such as language models, have the potential to profoundly transform the world across various domains. Here are several ways in which generative AIs may have a significant impact:

- Content Generation: Generative AIs can automate the creation of content across various mediums, including text, images, music, and video. They can assist in writing articles, generating marketing materials, designing visuals, composing music, or even creating realistic deepfake videos. This has implications for industries such as media, advertising, entertainment, and creative arts.

- Personalized Experiences: Generative AIs can tailor experiences to individual preferences and needs. They can help create personalized recommendations for products, services, and content based on user data and preferences. This can enhance user experiences in areas such as e-commerce, entertainment, education, and healthcare.

- Natural Language Processing: Language models and generative AIs can enhance natural language understanding and generation, leading to improved chatbots, virtual assistants, and customer support systems. They can enable more sophisticated conversational interactions, automate customer service processes, and assist in language translation.

- Research and Development: Generative AIs can accelerate research and development in various fields by assisting with data analysis, hypothesis generation, and experimental design. They can help scientists, engineers, and researchers process vast amounts of data, explore new ideas, and make discoveries more efficiently.

- Creative Collaboration: Generative AIs can facilitate creative collaboration between humans and machines. They can assist artists, designers, and writers by generating ideas, providing creative suggestions, or acting as co-creators. This opens up possibilities for new forms of artistic expression and collaboration.

- Decision-Making Support: Generative AIs can assist in decision-making processes by providing insights, analyzing complex data sets, and simulating scenarios. They can aid in strategic planning, financial analysis, risk assessment, and policy development, helping individuals and organizations make more informed decisions.

- Humanitarian Applications: Generative AIs can be utilized in humanitarian efforts, such as disaster response, public health, and poverty alleviation. They can assist in analyzing large-scale data to identify patterns, predict outcomes, and provide insights for effective interventions and resource allocation.

- Ethical Considerations: The emergence of generative AIs raises important ethical considerations. Issues such as bias in training data, privacy concerns, and the responsible use of AI-generated content need to be carefully addressed to ensure that the transformative potential of generative AIs is harnessed responsibly and ethically.

It’s important to note that while generative AIs have immense potential, they also come with challenges and risks. Ensuring responsible development, addressing biases, protecting privacy, and upholding ethical standards are crucial to harnessing the transformative power of generative AIs for the benefit of society.

How might the moral systems of alien civilizations differ from our own?

When contemplating the moral systems of alien civilizations, we can only engage in speculative and imaginative thinking since we have no empirical knowledge of extraterrestrial life. However, considering the potential diversity of life forms and their unique environments, we can explore some ways in which their moral systems might differ from our own:

- Different Biological Basis: Aliens may have different sensory perceptions, cognitive abilities, and biological needs. These variations could shape their moral systems. For example, if an alien species lacks empathy or emotions as we understand them, their moral framework may not prioritize concepts like compassion or altruism.

- Cultural Relativism: Alien civilizations might possess vastly different cultures and value systems due to their unique evolutionary paths and social structures. Cultural relativism could lead to moral values that diverge significantly from ours, making it challenging for us to comprehend or relate to their ethical perspectives.

- Different Notions of Personhood: Aliens may have distinct concepts of personhood and the boundaries of moral consideration. They might have different criteria for determining moral worth, such as intelligence, consciousness, or other characteristics that we might not prioritize in the same way. This could lead to different ethical obligations and attitudes towards other beings.

- Non-Human-Centric Ethics: Earthly moral systems are often anthropocentric, prioritizing the interests and well-being of humans. Alien civilizations, especially those with more advanced intelligence, may have expanded moral considerations beyond their own species. They could extend moral consideration to other sentient beings, ecosystems, or even artificial intelligences they have created.

- Different Ethical Frameworks: Alien moral systems might be guided by alternative ethical frameworks that we haven’t conceived of or that differ significantly from our own. They might emphasize different ethical principles or philosophical perspectives that challenge or supplement our understanding of right and wrong.

- Alternative Priorities: The values and priorities of alien civilizations could be shaped by different challenges and environmental factors. They might prioritize sustainability, harmony with their ecosystems, or other objectives that differ from human-centric moral concerns.

- Technological Impact: Highly advanced alien civilizations might have overcome certain ethical dilemmas or developed unique moral perspectives based on their technological capabilities and experiences. For example, they might have resolved issues related to resource scarcity, population control, or interstellar travel, which could influence their ethical priorities.

It is important to approach these speculations with humility and an understanding that they are purely imaginative. Without concrete knowledge of extraterrestrial life, we can only engage in thought experiments and explore the potential diversity of moral systems that could exist in the vastness of the universe.

What are some famous mad scientists?

Throughout history, there have been several figures who have been portrayed or labeled as “mad scientists” due to their eccentricities, unorthodox experiments, or controversial beliefs. While “mad scientist” is a popular-culture label rather than a formal designation, here are a few famous figures, real and fictional, who have often been associated with the concept:

- Nikola Tesla: Tesla was an electrical engineer and inventor known for his groundbreaking work in alternating current (AC) power systems. He had an unconventional personality and ideas, often conducting experiments that were ahead of his time. Tesla’s eccentricities and claims, such as his ideas on wireless transmission of energy, contributed to the perception of him as a “mad scientist” in popular culture.

- Dr. Victor Frankenstein: Dr. Frankenstein is a fictional character created by Mary Shelley in her novel “Frankenstein.” He is portrayed as a scientist who creates a living creature through a controversial and unorthodox scientific experiment. Dr. Frankenstein’s pursuit of knowledge and his reckless actions have made him an iconic representation of the “mad scientist” archetype.

- Dr. Jekyll and Mr. Hyde: These characters, created by Robert Louis Stevenson in the novella “Strange Case of Dr. Jekyll and Mr. Hyde,” depict a scientist named Dr. Henry Jekyll, who creates a potion that transforms him into the evil Mr. Edward Hyde. Dr. Jekyll’s attempts to separate his good and evil sides through scientific means result in disastrous consequences and exemplify the dangers of unchecked experimentation.

- Dr. Moreau: Dr. Moreau is a character from H.G. Wells’ novel “The Island of Doctor Moreau.” He is a vivisectionist who conducts unethical experiments on animals, attempting to create human-like hybrids. Dr. Moreau’s disregard for ethical boundaries and his disturbing creations have contributed to his portrayal as a “mad scientist.”

- Dr. Emmett Brown: Dr. Brown is a fictional character from the “Back to the Future” film trilogy, portrayed by Christopher Lloyd. He is an eccentric inventor who creates a time machine out of a DeLorean car. Driven by his scientific pursuits and often engaging in unconventional experiments, Dr. Brown embodies the archetypal “mad scientist” persona in a lighthearted and comedic manner.

It’s important to note that, apart from Tesla, these figures are fictional, and even Tesla’s “mad scientist” image is a popular-culture caricature of a real engineer; none of these portrayals should be confused with real-life scientists or their work. The term “mad scientist” is largely a literary and cinematic trope used to depict characters with eccentric, morally ambiguous, or ethically questionable scientific pursuits.

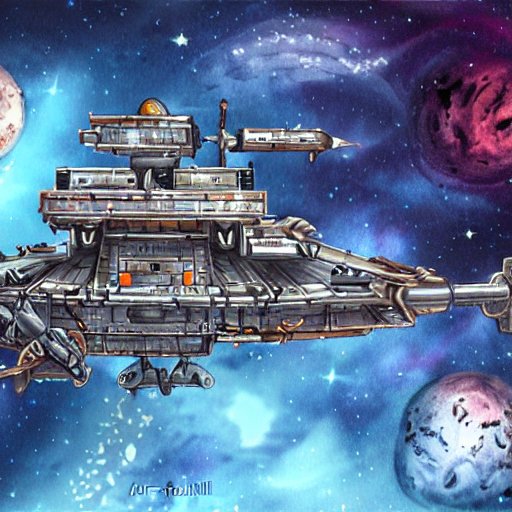

What are the economics of space piracy?

Space piracy, as a concept, involves acts of unauthorized aggression, theft, or disruption of space activities. While space piracy is primarily portrayed in science fiction, it is essential to recognize that real-world space activities are governed by international laws and treaties, and piracy is illegal and highly discouraged. Nevertheless, if we were to consider the hypothetical economics of space piracy in a fictional context, we can examine several factors:

- Motivation: Space piracy would likely be driven by economic motives. Pirates might aim to steal valuable resources, such as rare minerals, precious metals, or advanced technology from spacecraft or space stations. These stolen goods could be sold on black markets or to rogue entities for substantial profits.

- Cost of Operation: Space piracy would require significant financial resources. Pirates would need to acquire or build spacecraft capable of interstellar travel, equip themselves with advanced weaponry, establish bases or hideouts, and sustain their operations, including crew salaries, maintenance, and fuel costs. Funding such operations could come from illicit activities, extortion, or support from criminal organizations.

- Risk and Competition: Space piracy would be an extremely high-risk endeavor. Pirates would face potential confrontation with well-equipped defense forces, including national militaries or private security employed by space-faring organizations. The risk of retaliation and countermeasures would increase the cost and complexity of maintaining piracy operations. Additionally, competition among pirate factions might lead to conflicts over limited resources and territories, further straining profitability.

- Legal Framework: As mentioned, space activities are governed by international laws and treaties, such as the Outer Space Treaty and the Moon Agreement. These agreements establish principles of peaceful use of space, cooperation, and non-appropriation of celestial bodies. Engaging in space piracy would violate these legal frameworks, potentially leading to sanctions, legal actions, and international condemnation.

- Response and Defense: Space-faring nations and organizations would likely invest in robust security measures to protect their assets from piracy. This could involve employing armed security personnel, developing advanced surveillance systems, and implementing secure communication networks. The cost of defense and security could increase as a response to piracy threats, making it more challenging for pirates to succeed.

Considering the factors above, the economics of space piracy would likely be challenging. The risks, high costs of operation, legal implications, and the potential for strong defense measures by space-faring entities would likely limit the profitability and sustainability of space piracy activities. It is important to reiterate that space piracy is illegal, and international efforts are in place to ensure peaceful and cooperative exploration and utilization of space.

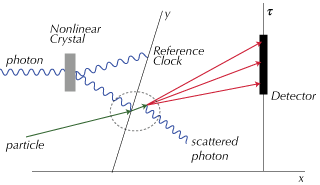

What are some good uses for acousto-optic devices?

Acousto-optic devices are versatile tools that harness the interaction of sound waves and light waves to enable a range of applications. Here are some good uses for acousto-optic devices:

- Laser Beam Control: Acousto-optic devices can be employed for precise laser beam manipulation. By applying an acoustic wave to a crystal or an optical fiber, the device can diffract, deflect, or modulate the laser beam’s intensity, frequency, or direction. This capability is valuable in laser scanning systems, laser printers, laser machining, and optical communication networks.

- Optical Spectroscopy: Acousto-optic devices find extensive applications in spectroscopy, where they facilitate the rapid tuning of light sources and the modulation of optical signals. Acousto-optic tunable filters (AOTFs) are particularly useful where fast, electronically controlled wavelength selection is needed, as in Raman and fluorescence spectroscopy and spectral imaging, where they provide efficient filtering and signal modulation.

- Optical Signal Processing: Acousto-optic devices are utilized in various signal processing applications, including signal modulation, filtering, and frequency shifting. They can perform tasks like frequency analysis, convolution, correlation, and beam steering, making them valuable in areas such as radar systems, optical computing, and telecommunications.

- Fiber Optic Communications: Acousto-optic devices play a role in fiber optic communication networks. They can be employed as tunable filters, optical switches, and modulators to control the routing and modulation of optical signals within fiber optic systems. This enables wavelength division multiplexing, signal demultiplexing, and efficient signal processing in high-speed communication networks.

- Imaging and Holography: Acousto-optic devices are utilized in imaging systems and holography setups. They can control the properties of light, such as intensity, polarization, and phase, allowing for dynamic image formation, spatial light modulation, and holographic recording. These devices contribute to applications like laser displays, laser printing, and three-dimensional imaging.

- Laser Doppler Velocimetry: Acousto-optic devices (Bragg cells) are used in laser Doppler velocimetry systems to measure the velocity and flow characteristics of fluids. The system splits laser light into two beams that cross in the flow, and particles moving through the crossing region scatter light at a Doppler-shifted frequency proportional to their speed. A Bragg cell frequency-shifts one of the beams, so the measured signal also reveals the direction of flow and remains usable near zero velocity. This makes the technique valuable in fields such as fluid dynamics, biomedical research, and industrial flow monitoring.

- Optical Computing: Acousto-optic devices have been explored for their potential in optical computing systems. They can be used for optical switching, routing, and signal processing in all-optical computing architectures, offering the advantages of parallelism, high-speed operation, and potentially low power consumption.
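For the laser beam control use listed above, a back-of-the-envelope calculation shows how the RF drive frequency sets the deflection. This is a minimal Python sketch, not part of ChatGPT's answer, assuming a Bragg-regime device in air and small angles: the acoustic wavelength is Λ = v/f, the external Bragg angle satisfies sin θ_B = λ₀/(2Λ), the first-order beam is deflected by 2θ_B (roughly λ₀f/v), and its optical frequency is shifted by f. The function names and the 4200 m/s sound speed (roughly the longitudinal value often quoted for tellurium dioxide) are illustrative assumptions.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def acoustic_wavelength_m(sound_speed_m_s: float, drive_freq_hz: float) -> float:
    # The RF drive launches a sound wave whose wavelength is Lambda = v / f.
    return sound_speed_m_s / drive_freq_hz


def bragg_angle_rad(wavelength_m: float, sound_speed_m_s: float, drive_freq_hz: float) -> float:
    # External Bragg condition with the vacuum optical wavelength: sin(theta_B) = lambda0 / (2 * Lambda).
    lam_ac = acoustic_wavelength_m(sound_speed_m_s, drive_freq_hz)
    return math.asin(wavelength_m / (2 * lam_ac))


def deflection_rad(wavelength_m: float, sound_speed_m_s: float, drive_freq_hz: float) -> float:
    # Angle between the undiffracted and first-order beams: 2 * theta_B (about lambda0 * f / v).
    return 2 * bragg_angle_rad(wavelength_m, sound_speed_m_s, drive_freq_hz)


def diffracted_freq_hz(wavelength_m: float, drive_freq_hz: float, order: int = 1) -> float:
    # The diffracted beam's optical frequency is shifted by order * f.
    return C / wavelength_m + order * drive_freq_hz


if __name__ == "__main__":
    lam0 = 633e-9  # helium-neon laser wavelength
    v = 4200.0     # assumed sound speed in the crystal (illustrative)
    for f in (60e6, 80e6, 100e6):
        theta = deflection_rad(lam0, v, f)
        print(f"{f / 1e6:.0f} MHz drive -> {theta * 1e3:.2f} mrad deflection")
```

With these assumed values, sweeping the drive from 60 to 100 MHz steers the beam over roughly 9 to 15 mrad, which is why acousto-optic deflectors can scan a beam with no moving parts.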

These are just a few examples of the many good uses for acousto-optic devices. Their ability to control light using sound waves opens up possibilities in various fields, including photonics, telecommunications, imaging, and scientific research.
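The laser Doppler velocimetry use mentioned in the list is where the frequency shift from an acousto-optic (Bragg) cell earns its keep. Below is a minimal Python sketch of the standard dual-beam relations, assuming two beams of wavelength λ crossing at full angle κ: the interference fringes are spaced d = λ / (2 sin(κ/2)), and with one beam shifted by f_s the measured burst frequency is f_m = f_s + u/d, so u = (f_m - f_s)·d. The sign convention, the function names, and the 40 MHz shift and 10° crossing angle in the example are illustrative assumptions, not details from ChatGPT's answer.

```python
import math


def fringe_spacing_m(wavelength_m: float, crossing_angle_rad: float) -> float:
    # Two beams crossing at full angle kappa form fringes spaced lambda / (2 sin(kappa / 2)).
    return wavelength_m / (2 * math.sin(crossing_angle_rad / 2))


def velocity_m_s(measured_freq_hz: float, shift_freq_hz: float,
                 wavelength_m: float, crossing_angle_rad: float) -> float:
    # The Bragg cell shift makes the fringes move, so a particle at rest produces
    # measured_freq == shift_freq. Frequencies above the shift are read as positive
    # velocity, below it as negative (sign convention assumed for this sketch).
    return (measured_freq_hz - shift_freq_hz) * fringe_spacing_m(wavelength_m, crossing_angle_rad)


if __name__ == "__main__":
    lam = 532e-9                # green laser wavelength (illustrative)
    kappa = math.radians(10.0)  # assumed beam crossing angle
    shift = 40e6                # assumed Bragg cell frequency shift
    for f_measured in (41e6, 40e6, 39e6):
        u = velocity_m_s(f_measured, shift, lam, kappa)
        print(f"{f_measured / 1e6:.0f} MHz burst -> {u:+.2f} m/s")
```

Without the shift, particles moving at about 3 m/s in opposite directions would both give the same 1 MHz burst frequency; the Bragg cell removes that direction ambiguity.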